- Joined

- Aug 2, 2016

- Posts

- 1,611

- Solutions

- 4

- Reaction

- 635

- Points

- 528

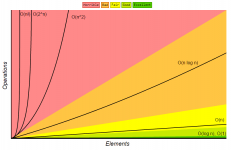

Introduction to Algorithm Complexity and Big-O notation

Introduction:

It is common for coders to jump straight into writing code, without thinking about the best way to do things. There have been many alternative algorithms written for common things, such as sorting lists, and some are much better than others. This tutorial is designed to introduce you to algorithm complexity analysis, and the big O (pronounced "big oh") notation. This will help you understand more about the best way to write algorithms to minimize time and memory usage.

What is algorithm complexity, and why should I care about it:

Algorithm complexity is just a way to formally measure how fast a program or algorithm runs. We cannot just write the code and time how fast it runs in practise, because many things affect the runtime in practise (such as the processor you are using, RAM, bus speeds, etc). So algorithm complexity analysis is designed to compare algorithms in theory, to see which is the most efficient.

It is important to be aware, at least partially, of different algorithm complexities so you don't end up accidentally writing algorithms that take 10 times longer than necessary. Even if you don't need to be able to recognize instantly whether an algorithm is linear time or exponential time, this tutorial should give you an idea about which is which, because such things will become very important when you are dealing with massive amounts of data or large numbers.

Terminology:

Fundamental Instruction - the simplest operations, for example assigning values to variables, comparisons, retrieving values from arrays, basic arithmetic (addition, multiplication, incrementation, etc). Don't worry, we aren't just going to count these.

Worst case analysis - pretty self explanatory, this just means that if we have an algorithm, we analyse it by assuming we are as unlucky as possible with the input (i.e. the input that will cause the algorithm to do the most work)

Asymptotic Behaviour:

An asymptote is something which occurs when a function gets closer and closer to a value as its input changes, but never quite reaches it. We can use this by only keeping the largest growing part of the function, because that will have the most influence on the output as it gets large. We also ignore any coefficients. For example, 3n + 2 becomes just n, and 2n^3 + 4n + 2 just becomes n^3. This is a little bit of maths involved, because you need to understand what functions grow faster than others. Example: 1 < sqrt

This site provides a great reference sheet for comparing the complexities of different common algorithm implementations: You do not have permission to view the full content of this post. Log in or register now.

Complexity in code:

Now that we understand what asymptotic behaviour of functions is, we can start looking at some code to find the complexity of the algorithms in practise. The biggest thing we look for in code is Loops, because loops are the things which will cause the fundamental instructions to be repeated over and over rather than a constant amount.

A function without any loops is constant, i.e. f

Example:

Code:

int x = 1;

int y = x + 2;

if(x == 1) {

System.out.println(x);

1}A function with a single loop that loops n times is f

Example:

Code:

int n = 10;

for(int i = 0; i < n; i++) {

System.out.println(i + x);

}in this loop, each time the loop occurs it executes multiple fundamental instructions e.g. comparison i < 2, incrementation i++, addition i + x, etc. but these are constant each time, so it is ignored - the number of times the loop occurs is the biggest influence.

A function with nested loops, e.g. a loop inside a loop, is just a power of n.

Example:

Code:

int n = 10;

for(int i = 0; i < n; i++) {

for(int j = 0; j < n; j++) {

System.out.println(i + j);

}

}By the same logic, a loop inside a loop inside a loop is f

Big Ɵ (Big Theta) Notation

Up until now we have been using f

if f

This is known as Big-Ɵ notation. (The character Ɵ is the greek letter theta.)

This notation is used when we know exactly the complexity of a function. But sometimes we may not know exactly what complexity it has, only a maximum / minimum complexity. So we then introduce 2 new notations - Big O and Big Omega notations to provide a maximum and minimum complexity.

Big O Notation

Big O notation is like a maximum complexity we know that a function has. All we know, when we use this notation, is that the true complexity (Big Ɵ) is the same of less than the Big O complexity. Technically, we say that O(f

Usually we can never actually know if we have found the most efficient algorithm for a particular task, for example the most efficient algorithm to sort a list into order, so we use the Big O notation on existing algorithms to show that the most efficient solution is either the same or better than the existing ones.

Example of a sorting algorithm in Java, this is an implementation called the BubbleSort:

Code:

int currentMinIndex = 0;

for (int front = 0; front < intArray.length; front++)

{

currentMinIndex = front;

for (int i = front; i < intArray.length; i++)

{

if (intArray[i] < intArray[currentMinIndex])

{

currentMinIndex = i;

}

}

int tmp = intArray[front];

intArray[front] = intArray[currentMinIndex];

intArray[currentMinIndex] = tmp;

}We can see that this implementation has a loop inside a loop. As we saw, if we have a loop that iterates n times inside a loop which iterates n times, then we have f

Other Notations

There are actually 5 unique sets of functions that you might see. These are:

o(f

O(f

Ɵ(f

Ω(f

ω(f

Special thanks to MeshCollider

Attachments

-

You do not have permission to view the full content of this post. Log in or register now.